Quickstart

A minimalistic example: Hello 🔥 API

The best way to start getting familiarised with Flama is by example. Let's start coding the simplest application:

from flama import Flama

app = Flama()

@app.route("/")
def home():
    return {"message": "Hello 🔥"}

The code does the following:

- Imports Flama and creates the instance app of this class.

- Adds the route() decorator to the user-defined function home. This decorator specifies that the function home will be called when a request to the route / is made. This will return the dictionary {"message": "Hello 🔥"}.
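To make the role of the decorator more concrete, here is a toy sketch of decorator-based route registration using only the standard library. It is not how Flama is implemented; the names TinyRouter and dispatch are hypothetical and exist only for this illustration.

```python
from typing import Callable, Dict


class TinyRouter:
    """Toy stand-in for an application object (NOT Flama's implementation)."""

    def __init__(self) -> None:
        # Maps a path such as "/" to the function that handles it.
        self.handlers: Dict[str, Callable[[], dict]] = {}

    def route(self, path: str) -> Callable[[Callable[[], dict]], Callable[[], dict]]:
        """Return a decorator that registers a function under `path`."""

        def register(func: Callable[[], dict]) -> Callable[[], dict]:
            self.handlers[path] = func
            return func  # the decorated function itself is left unchanged

        return register

    def dispatch(self, path: str) -> dict:
        """Call the handler registered for `path` (hypothetical helper)."""
        return self.handlers[path]()


router = TinyRouter()


@router.route("/")
def home() -> dict:
    return {"message": "Hello 🔥"}


# Simulate a request to "/": the registered function is looked up and called.
result = router.dispatch("/")
```

The point of the sketch is only that decorating home stores it under the path "/", so a later request to that path can find it and call it. A real framework does much more, such as HTTP parsing and response serialisation.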

You can download this code in your examples folder by running:

cd examples
wget https://raw.githubusercontent.com/vortico/flama/master/examples/hello_flama.py

Running your App

To run your first Flama application:

flama run examples.hello_flama:app

INFO: Started server process [78260]
INFO: Waiting for application startup.
INFO: Application startup complete.
INFO: Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)

This command runs the uvicorn web server. If you now open http://127.0.0.1:8000, you should see the greeting message {"message": "Hello 🔥"} in your browser.
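If you prefer to check the endpoint from Python rather than from a browser, a minimal sketch along these lines may help. It uses only the standard library; the helper name fetch_json is hypothetical, and the sketch assumes the server is already running on 127.0.0.1:8000 in another terminal.

```python
import http.client
import json
from typing import Any, Tuple


def fetch_json(host: str, port: int, path: str = "/", timeout: float = 5.0) -> Tuple[int, Any]:
    """Send a GET request and return (status code, decoded JSON body)."""
    connection = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        connection.request("GET", path)
        response = connection.getresponse()
        body = response.read().decode("utf-8")
        return response.status, json.loads(body)
    finally:
        connection.close()


# Usage, with `flama run examples.hello_flama:app` running elsewhere:
#   status, payload = fetch_json("127.0.0.1", 8000)
```

With the application from this section running, the payload should be the same greeting dictionary that the browser displays.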

Alternative running

The previous code can be tuned a bit so that you can run it the same way from your favourite programming IDE:

import flama
from flama import Flama

app = Flama()

@app.route("/")
def home():
    return {"message": "Hello 🔥"}

if __name__ == "__main__":
    flama.run(flama_app=app, server_host="0.0.0.0", server_port=8000)

You can now run the code directly from your IDE, either by clicking on the run button or by using any key shortcut you prefer, and you will obtain the same result. From now on, we will always include this small modification in our examples, but we will keep using the Flama CLI to run them, to showcase the conveniences it brings.

What if the server does not start?

There are several reasons why the server might not start after running flama run. If another service is already using port 8000, you will see an error like:

flama run examples.hello_flama:app

INFO: Started server process [54203]
INFO: Waiting for application startup.
INFO: Application startup complete.
ERROR: [Errno 48] error while attempting to bind on address ('127.0.0.1', 8000): address already in use
INFO: Waiting for application shutdown.
INFO: Application shutdown complete.

If you get an error similar to:

flama run examples.hello_flama:app

command not found: flama

this means the virtual environment where Flama was installed is not active. Activate the virtual environment (see installation) and try again.
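For the port conflict described earlier in this section, it can be handy to check whether something is already listening on the port before starting the server. The following is a minimal standard-library sketch; the function name port_in_use is hypothetical and is not part of Flama or uvicorn.

```python
import socket


def port_in_use(host: str, port: int, timeout: float = 0.5) -> bool:
    """Return True if a TCP connection to (host, port) succeeds."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        probe.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise,
        # instead of raising an exception for a refused connection.
        return probe.connect_ex((host, port)) == 0


# Usage: check the address from the error message before running the app.
#   if port_in_use("127.0.0.1", 8000):
#       print("Port 8000 is busy; stop the other service or pick another port.")
```

If the port is busy, either stop the service that holds it or start the application on a different port, for example through the server_port argument of flama.run shown earlier.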

Another possibility is that the application path (i.e. examples.hello_flama:app) is wrong. This is the case when you have an error like:

flama run hello_flama:app

ERROR: Error loading ASGI app. Could not import module "hello_flama".

The only way of fixing this is by providing the correct application path. In this example, the path must be examples.hello_flama:app given that we are running Flama CLI at the same level as the folder examples:

examples/
└── hello_flama.py
    └── app

Autogenerated API docs

Is anything missing from our previous code? Sure, docstrings! We know, they are very important. Let's add them:

from flama import Flama

app = flama.Flama( openapi={ "info": { "title": "Hello-🔥", "version": "1.0", "description": "My first API", }, "tags": [ {"name": "Salute", "description": "This is the salute description"}, ], })

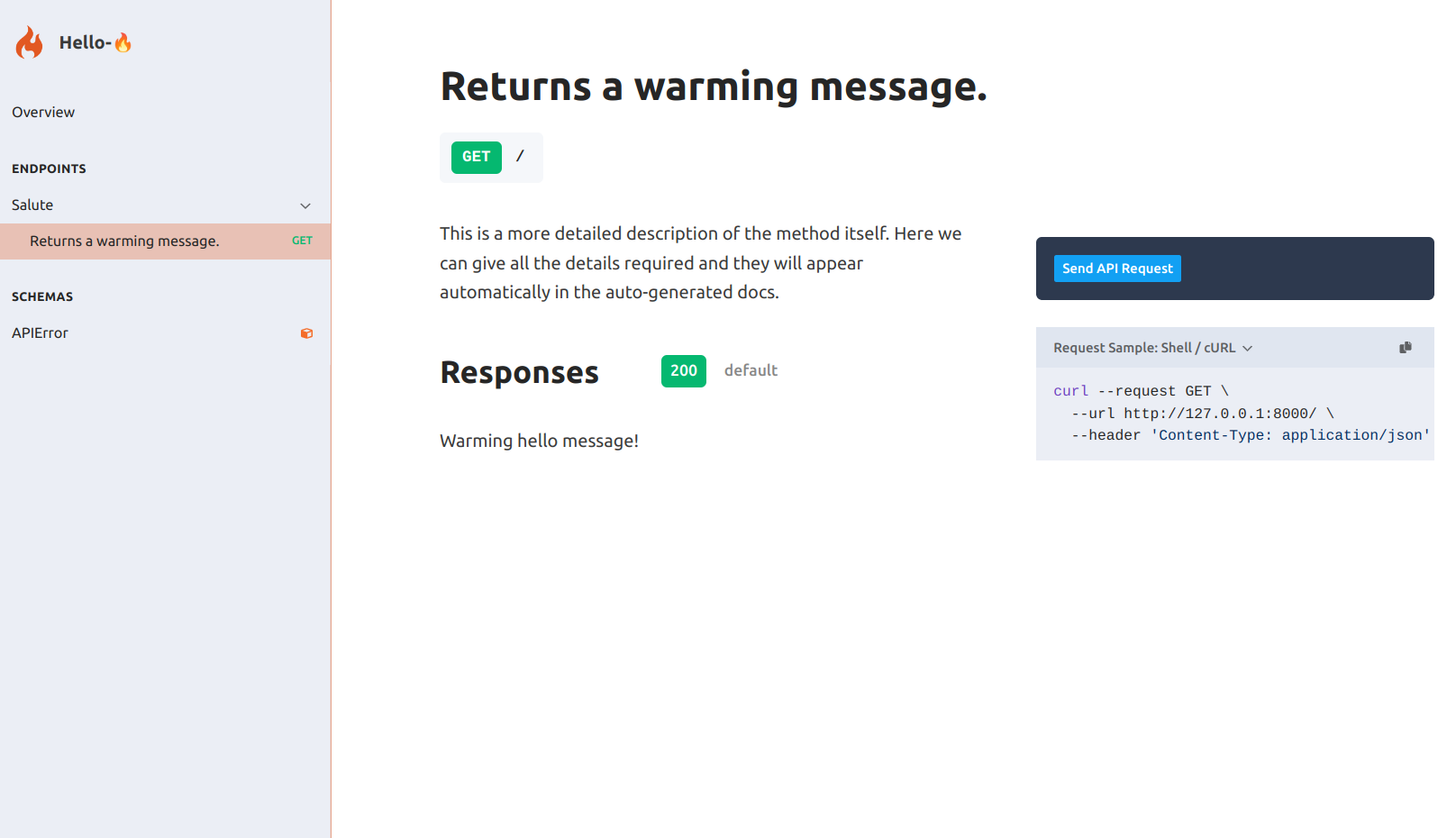

@app.route("/")def home(): """ tags: - Salute summary: Returns a warming message. description: This is a more detailed description of the method itself. Here we can give all the details required and they will appear automatically in the auto-generated docs. responses: 200: description: Warming hello message! """ return {"message": "Hello 🔥"}

if __name__ == "__main__": flama.run(flama_app=app, server_host="0.0.0.0", server_port=8000)We can proceed, and run:

flama run examples.hello_flama:app

INFO: Started server process [3267]INFO: Waiting for application startup.INFO: Application startup complete.INFO: Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)This time, let's visit http://127.0.0.1:8000/docs/ and see what's there waiting for us.

Yup, Flama automatically generates the API schema following the OpenAPI standard, and provides a convenient interface to interact with the documentation.
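To give a rough idea of what an OpenAPI document for this application could look like, the sketch below assembles one by hand as a plain Python dictionary from the metadata and docstring used above. It is only an illustration of the OpenAPI layout: the version string and the use of the GET method are assumptions, and the exact document Flama emits may differ.

```python
import json

# Metadata taken from the openapi argument in the example above.
info = {"title": "Hello-🔥", "version": "1.0", "description": "My first API"}
tags = [{"name": "Salute", "description": "This is the salute description"}]

# Operation object mirroring the fields written in the docstring of home().
operation = {
    "tags": ["Salute"],
    "summary": "Returns a warming message.",
    "description": "This is a more detailed description of the method itself.",
    "responses": {"200": {"description": "Warming hello message!"}},
}

schema = {
    "openapi": "3.1.0",  # assumed version, not confirmed by this guide
    "info": info,
    "tags": tags,
    # Assumes the route answers GET requests on "/".
    "paths": {"/": {"get": operation}},
}

# Non-ASCII characters are escaped by default, which keeps the output portable.
print(json.dumps(schema, indent=2))
```

The top-level info and tags entries come from the openapi argument, while each route contributes an entry under paths built from its docstring. This is the kind of structure that the interactive documentation page renders.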

To dive deeper into the Flama CLI, please go to the Flama CLI section. If you are more interested in learning how to serve ML models with Flama, visit the Flama ML API section.