Model components

In the previous section we covered the fundamentals of building custom model resources. Understanding what model resources are, and how to use them, was the first step in building a custom model. We took advantage of the Flama built-in ModelResource to create a custom model resource that incorporated custom methods and exposed them as routes of our API, beyond the standard /predict/ and /inspect/ routes added by default when using Flama CLI, or the syntax app.models.add_model (see the sections about adding models and adding model resources for more details). Without us knowing, when we created our custom model resource, Flama added it as a Component of the application (specifically, as a ModelComponent). But, what is a component? And what is a model component?

Before we dive into the details of the ModelComponent class, let's first understand what a Component and a ModelComponent represent within the context of a Flama application. These are very important concepts, as they will help us understand how to extend the functionality of our application. Once we have a good understanding of these concepts, we will provide an example of how to build a Flama application that uses a custom model component which incorporates a lazy loading mechanism. The example will show us the power of being able to control the finest details of our models.

- What is a component? Flama Components are the building blocks of an application. Components are an abstraction which allows us to isolate the logic required to create the objects needed by the application methods exposed as routes. Whenever an application route receives a request, it calls the corresponding method of the application, which encapsulates the functionality responsible for yielding the desired response. Such functionality typically requires objects to be created, and these objects are instantiated by the application Components. Thus, Components permit us to isolate the logic that's not directly related to the application's business logic, and to reuse it across different application methods. A natural question at this point could be: "what are these objects that components create?". The answer is: they can be anything! A query parameter, a request body, a database connection, a machine learning model, etc.

- What is a model component? One of the built-in components of Flama is the ModelComponent. This component is responsible for creating the objects that are required by the application methods in charge of handling the requests to (at least) the /predict/ and /inspect/ routes. This means that, whenever a request which requires an ML model is received (e.g., to make a prediction, or to inspect the model), the ModelComponent will automatically resolve the creation of the corresponding ML model object.

If you are asking yourself what a lazy loading mechanism is, here is a brief explanation: a lazy loading mechanism allows us to delay the creation of an object until it is actually needed. This is useful when we want to avoid the overhead of creating an object that we might not need. For example, if we have a machine learning model that takes a long time to load, we might want to defer the creation of the model until it is actually needed. This way, we avoid the loading time of the model at startup, reducing the time it takes for our application to be ready to receive requests.
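As a minimal illustration of lazy loading in plain Python, independent of Flama, the sketch below uses functools.cached_property from the standard library. The classes ExpensiveModel and Service are hypothetical stand-ins introduced only for this example; they are not part of Flama.

```python
import functools


class ExpensiveModel:
    """Hypothetical stand-in for a model that is slow to build."""

    instances = 0  # counts how many times the model has been built

    def __init__(self) -> None:
        ExpensiveModel.instances += 1

    def predict(self, x: list) -> list:
        return [2 * value for value in x]


class Service:
    @functools.cached_property
    def model(self) -> ExpensiveModel:
        # Runs only on first access; the result is then cached on the instance.
        return ExpensiveModel()


service = Service()
built_at_startup = ExpensiveModel.instances      # nothing has been built yet
prediction = service.model.predict([1.0, 2.0])   # first access builds the model
service.model.predict([3.0])                     # second access reuses it
built_after_requests = ExpensiveModel.instances
```

Creating the Service costs nothing; the model is built exactly once, at the moment the first prediction is requested.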

The ModelComponent class

Preamble

The best way to know how to implement our own ModelComponent is by example, as we've been doing so far in previous sections. With this purpose in mind, we are going to build a Flama application which loads a machine learning model only when the first request to any of the /predict/ or /inspect/ routes is received. To do so, we will need:

- A custom Model class which wraps the methods of the machine learning model we want to use. In this example, we will implement the predict and inspect methods of the model.

- A custom ModelComponent class which will be in charge of instantiating our custom Model class, and returning it via the resolve method. It is worth highlighting that resolve is the method called by the dependency injection mechanism of Flama, which is why implementing it is mandatory.

- A custom ModelResource class which will be in charge of exposing the predict and inspect methods of our custom Model class as routes of our API. This is, in essence, the same class we implemented in the previous section. As we did there, we will also expose an additional method which returns some metadata, including the status of the model (whether it is loaded or not).
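To make the role of the resolve method more concrete, here is a toy sketch of dependency injection driven by type annotations. It is not Flama's actual implementation: ToyInjector, ToyModelComponent and predict_handler are hypothetical names, and the matching rule (the return annotation of resolve is compared with the handler's parameter annotations) is an assumption made for illustration.

```python
import inspect
import typing


class Model:
    """Hypothetical object that a route handler needs."""

    def predict(self, x: int) -> int:
        return x + 1


class ToyModelComponent:
    """Knows how to build a Model; plays the role of a component."""

    def resolve(self) -> Model:
        return Model()


class ToyInjector:
    def __init__(self, components: typing.List[typing.Any]) -> None:
        # Index each component by the return annotation of its resolve method.
        self._by_type = {
            typing.get_type_hints(c.resolve)["return"]: c for c in components
        }

    def call(self, handler: typing.Callable[..., typing.Any], **kwargs: typing.Any) -> typing.Any:
        hints = typing.get_type_hints(handler)
        for name in inspect.signature(handler).parameters:
            # Fill every missing argument whose type a component can provide.
            if name not in kwargs and hints.get(name) in self._by_type:
                kwargs[name] = self._by_type[hints[name]].resolve()
        return handler(**kwargs)


def predict_handler(model: Model, x: int) -> int:
    return model.predict(x)


injector = ToyInjector([ToyModelComponent()])
result = injector.call(predict_handler, x=41)  # model is injected automatically
```

The handler never constructs a Model itself; it only declares that it needs one, and the component supplies it.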

Model

Let's start implementing the easiest of the ingredients we have mentioned above:

    import typing

    from flama.models import Model


    class MyCustomModel(Model):
        def __init__(self, model=None, meta=None, artifacts=None):
            # All arguments are optional, so an empty wrapper can be created.
            self.model = model
            self.meta = meta
            self.artifacts = artifacts

        def inspect(self) -> typing.Any:
            return self.model.get_params()

        def predict(self, x: typing.Any) -> typing.Any:
            return self.model.predict(x)

As we can see, the MyCustomModel class inherits from the Model class, which is a built-in class of Flama. This base class provides the initialisation of the model attribute, and the inspect and predict methods, which will be exposed as routes of our application. If we wanted to instantiate a model object of the class MyCustomModel, we would need to pass the model object as an argument to the constructor, as follows:

    import flama

    model_artifact = flama.load("sklearn_model.flm")
    model = MyCustomModel(model_artifact.model)

ModelComponent

Now that we have the Model class implemented, we need the ModelComponent class. This class will be in charge of instantiating the MyCustomModel class, and returning it via the resolve method. In this particular example, we are going to implement a lazy loading mechanism, which means that the model will be instantiated only when the first request to any of the /predict/ or /inspect/ routes is received. This is different from what Flama did under the hood when we used app.models.add_model or app.models.add_model_resource. In both cases a ModelComponent was created without us knowing, and the model was instantiated at the startup of the application. In our case, we want to delay the instantiation of the model until the first relevant request happens. Therefore, the resolve method will not be the one making the call to the constructor of the MyCustomModel class. Instead, we will implement a method called load which will be in charge of instantiating the model object. The resolve method will call load if the model is not initialised yet, and then return the model object. Without further ado, let's implement our ModelComponent class:

    import flama
    from flama.models import ModelComponent


    class MyCustomModelComponent(ModelComponent):
        def __init__(self, model_path: str):
            self._model_path = model_path
            # Empty wrapper with no underlying model: cheap to create.
            self.model = MyCustomModel()

        def load(self):
            load_model = flama.load(self._model_path)
            self.model = MyCustomModel(load_model.model, load_model.meta, load_model.artifacts)

        def reset(self):
            self.model = MyCustomModel()

        def resolve(self) -> MyCustomModel:
            # Load the model only the first time it is requested.
            if not self.model.model:
                self.load()

            assert self.model.model
            return self.model

In short, to instantiate an object of the MyCustomModelComponent class, we need to pass the path to the model file as an argument to the constructor. This path is stored in the self._model_path attribute. The self.model attribute is an instance of the MyCustomModel class whose underlying model is None, which makes it lightweight by default. The load method is in charge of loading the model file, and assigning a fully initialised MyCustomModel to the self.model attribute. We've also added a reset method, which replaces self.model with an empty MyCustomModel again, i.e. it unloads the model. Finally, the resolve method calls load if the underlying model is not initialised yet, and returns the self.model attribute.
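The lifecycle of the component (load on first resolve, reuse afterwards, reload after a reset) can be checked without Flama or an FLM file by swapping the file loading for a stand-in loader. In the sketch below, FakeEstimator, LazyComponent and the loader argument are hypothetical names introduced for the example; only the load, reset and resolve method names mirror the component described in this section.

```python
import typing


class FakeEstimator:
    """Hypothetical stand-in for the object stored in a model file."""

    def predict(self, x: typing.List[float]) -> typing.List[float]:
        return [value + 1 for value in x]


class LazyComponent:
    """Mirrors load/reset/resolve, with the loader injected for testing."""

    def __init__(self, loader: typing.Callable[[], FakeEstimator]) -> None:
        self._loader = loader
        self.model: typing.Optional[FakeEstimator] = None
        self.load_calls = 0  # how many times the loader has run

    def load(self) -> None:
        self.load_calls += 1
        self.model = self._loader()

    def reset(self) -> None:
        self.model = None

    def resolve(self) -> FakeEstimator:
        if self.model is None:
            self.load()
        assert self.model is not None
        return self.model


lazy = LazyComponent(loader=FakeEstimator)
loaded_before = lazy.model is not None        # False: nothing loaded yet
first = lazy.resolve()                        # triggers the first load
second = lazy.resolve()                       # reuses the loaded object
calls_after_two_resolves = lazy.load_calls    # 1
lazy.reset()
loaded_after_reset = lazy.model is not None   # False again
lazy.resolve()                                # triggers a second load
calls_after_reload = lazy.load_calls          # 2
```

Injecting the loader keeps the sketch self-contained; in the real component the loading step is the call to flama.load with the stored path.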

How will we have to initialise the MyCustomModelComponent class? We will have to do it as follows:

    component = MyCustomModelComponent("sklearn_model.flm")

The object component will be passed to the last of the ingredients we need to implement, namely the ModelResource class.

ModelResource

We have already implemented the ModelResource class in the previous section, so we will not go into all the details of the implementation. The only difference with the previous section is that we are not going to use the model_path class attribute this time. This class attribute is used to instantiate a ModelComponent object internally. However, we have our own ModelComponent class, so we don't want Flama to create a ModelComponent object for us. Instead, we want to pass our own ModelComponent object to the ModelResource, and this is what we are going to do. Let's see how:

class MyCustomModelResource(BaseModelResource[MyCustomModelComponent]): name = "custom_model" verbose_name = "Lazy-loaded ScikitLearn Model" component = component

info = { "model_version": "1.0.0", "library_version": "1.0.2", }

def _get_metadata(self): return { "metadata": { "built-in": { "verbose_name": self._meta.verbose_name, "name": self._meta.name, }, "custom": { **self.info, "loaded": self.component.model.model is not None, "date": datetime.now().date(), "time": datetime.now().time(), }, } }

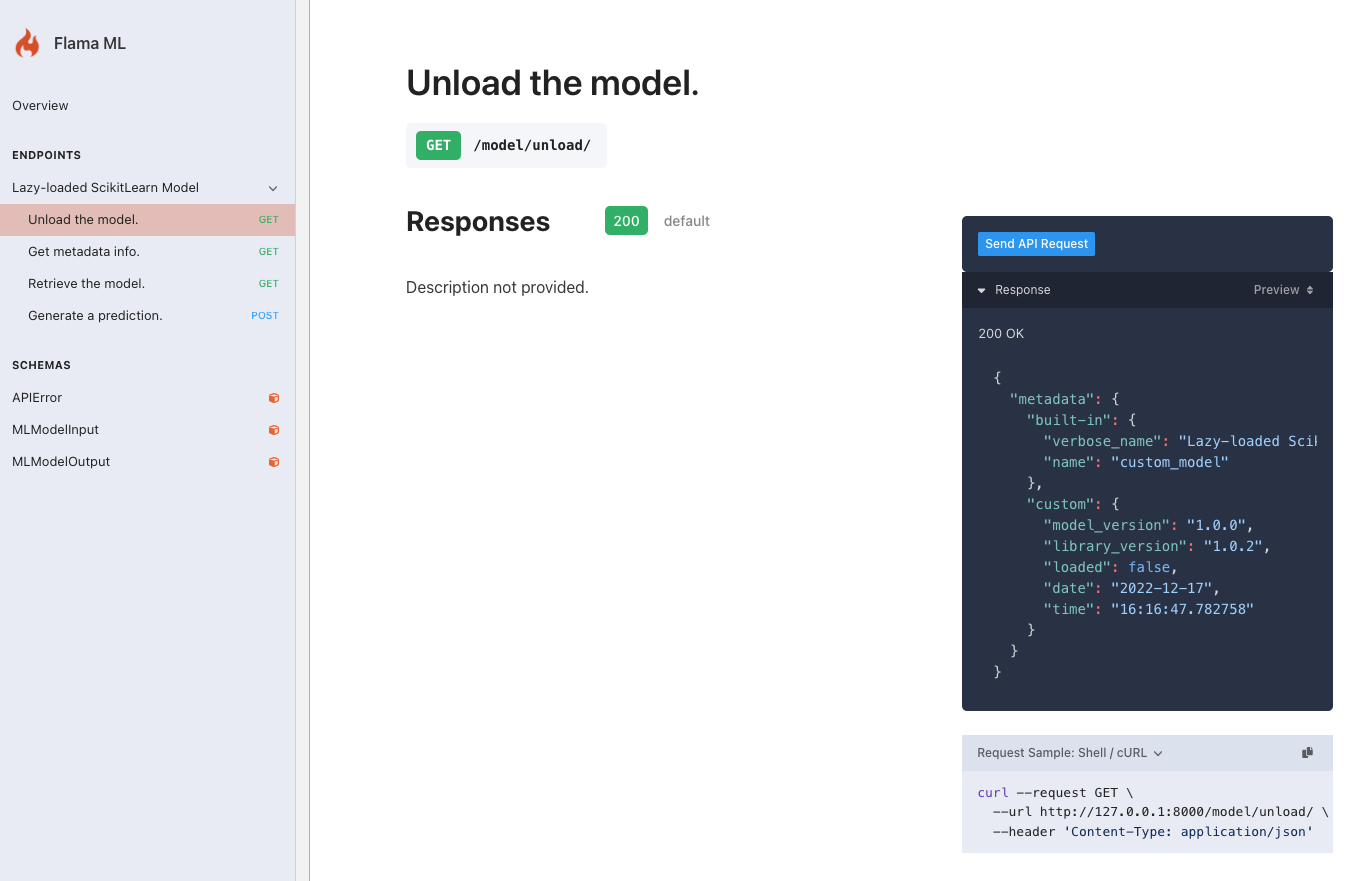

@resource_method("/unload/", methods=["GET"], name="unload-method") def unload(self): """ tags: - Lazy-loaded ScikitLearn Model summary: Unload the model. """ self.component.reset() return self._get_metadata()

@resource_method("/metadata/", methods=["GET"], name="metadata-method") def metadata(self): """ tags: - Lazy-loaded ScikitLearn Model summary: Get metadata info. """ return self._get_metadata()As we can see, the only difference with the implementation we saw in the previous section is that we are using the class attribute component, which we are setting to the component object we instantiated earlier. Obviously, we are also adding the unload method, which is in charge of calling the unload_model method of the MyCustomModelComponent class. The metadata method is also different, as it is now returning the loaded attribute, which is a boolean value indicating whether the model is loaded or not. The loaded attribute is obtained by checking whether the self.component.model.model attribute is None or not. If it is None, then the model is not loaded, and if it is not None, then the model is loaded. This will be useful for us to check whether the lazy loading mechanism is working as expected. Finally, we could avoid the instantiation of the component object by calling the MyCustomModelComponent class directly in the component class attribute. This would look something like this:

    class MyCustomModelResource(BaseModelResource[MyCustomModelComponent]):
        name = "custom_model"
        verbose_name = "Lazy-loaded ScikitLearn Model"
        component = MyCustomModelComponent("sklearn_model.flm")

        # rest of the code ...

Adding the component

Now that we have implemented the ModelComponent and the ModelResource, we need to add our custom model component to our application. As we can see below, the procedure is quite similar to the way in which we added a model resource in the previous section:

    app = Flama(
        openapi={
            "info": {
                "title": "Flama ML",
                "version": "0.1.0",
                "description": "Machine learning API using Flama 🔥",
            }
        },
        docs="/docs/",
    )

    app.add_component(component)
    app.models.add_model_resource(path="/model", resource=MyCustomModelResource)

This is the most verbose way of adding it. We could also add it by using the components argument of the Flama class constructor:

    app = Flama(
        openapi={
            "info": {
                "title": "Flama ML",
                "version": "0.1.0",
                "description": "Machine learning API using Flama 🔥",
            }
        },
        docs="/docs/",
        components=[component],
    )

    app.models.add_model_resource(path="/model", resource=MyCustomModelResource)

Run

Putting everything together, we have the following code:

    import typing
    from datetime import datetime

    import flama
    from flama import Flama
    from flama.models import BaseModelResource, ModelComponent
    from flama.models.base import Model
    from flama.resources import resource_method


    class MyCustomModel(Model):
        def __init__(self, model=None, meta=None, artifacts=None):
            self.model = model
            self.meta = meta
            self.artifacts = artifacts

        def inspect(self) -> typing.Any:
            return self.model.get_params()

        def predict(self, x: typing.Any) -> typing.Any:
            return self.model.predict(x)


    class MyCustomModelComponent(ModelComponent):
        def __init__(self, model_path: str):
            self._model_path = model_path
            self.model = MyCustomModel()

        def load(self):
            load_model = flama.load(self._model_path)
            self.model = MyCustomModel(load_model.model, load_model.meta, load_model.artifacts)

        def reset(self):
            self.model = MyCustomModel()

        def resolve(self) -> MyCustomModel:
            if not self.model.model:
                self.load()

            assert self.model.model
            return self.model


    component = MyCustomModelComponent("sklearn_model.flm")


    class MyCustomModelResource(BaseModelResource[MyCustomModelComponent]):
        name = "custom_model"
        verbose_name = "Lazy-loaded ScikitLearn Model"
        component = component

        info = {
            "model_version": "1.0.0",
            "library_version": "1.0.2",
        }

        def _get_metadata(self):
            return {
                "metadata": {
                    "built-in": {
                        "verbose_name": self._meta.verbose_name,
                        "name": self._meta.name,
                    },
                    "custom": {
                        **self.info,
                        "loaded": self.component.model.model is not None,
                        "date": datetime.now().date(),
                        "time": datetime.now().time(),
                    },
                }
            }

        @resource_method("/unload/", methods=["GET"], name="unload-method")
        def unload(self):
            """
            tags:
                - Lazy-loaded ScikitLearn Model
            summary:
                Unload the model.
            """
            self.component.reset()
            return self._get_metadata()

        @resource_method("/metadata/", methods=["GET"], name="metadata-method")
        def metadata(self):
            """
            tags:
                - Lazy-loaded ScikitLearn Model
            summary:
                Get metadata info.
            """
            return self._get_metadata()


    app = Flama(
        openapi={
            "info": {
                "title": "Flama ML",
                "version": "0.1.0",
                "description": "Machine learning API using Flama 🔥",
            }
        },
        docs="/docs/",
        components=[component],
    )

    app.models.add_model_resource(path="/model", resource=MyCustomModelResource)

    if __name__ == "__main__":
        flama.run(flama_app="__main__:app", server_host="0.0.0.0", server_port=8080, server_reload=True)

Now we only have to run the application, and we will be able to test our custom model component. As always, the best way to check that everything is working as expected is by navigating to the /docs/ endpoint. This will show you the documentation of the app:

Testing the lazy loading mechanism

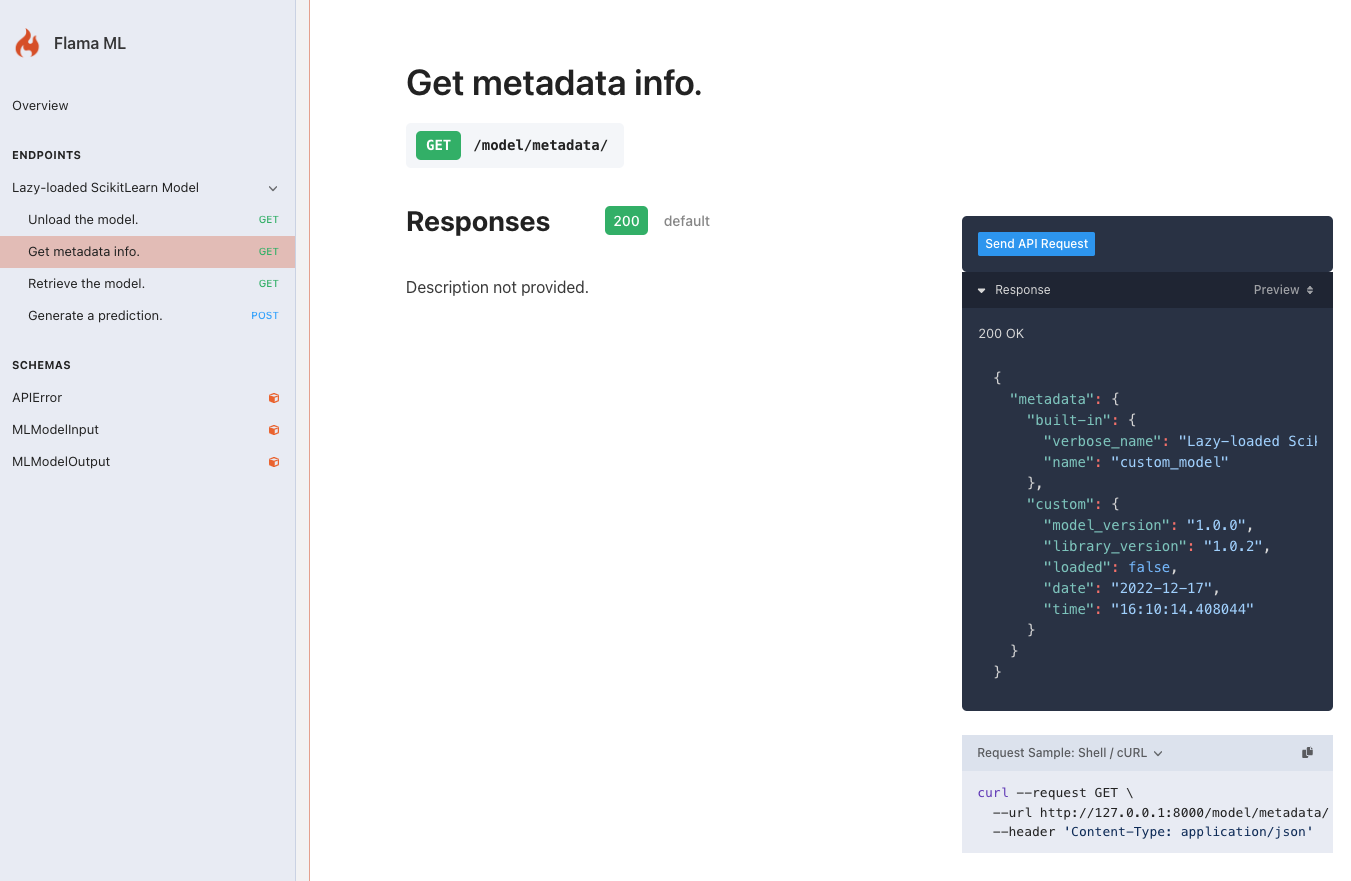

To check that the lazy loading mechanism is working as expected, we can navigate to the section Get metadata info, and click on Send API Request. This will return the following response:

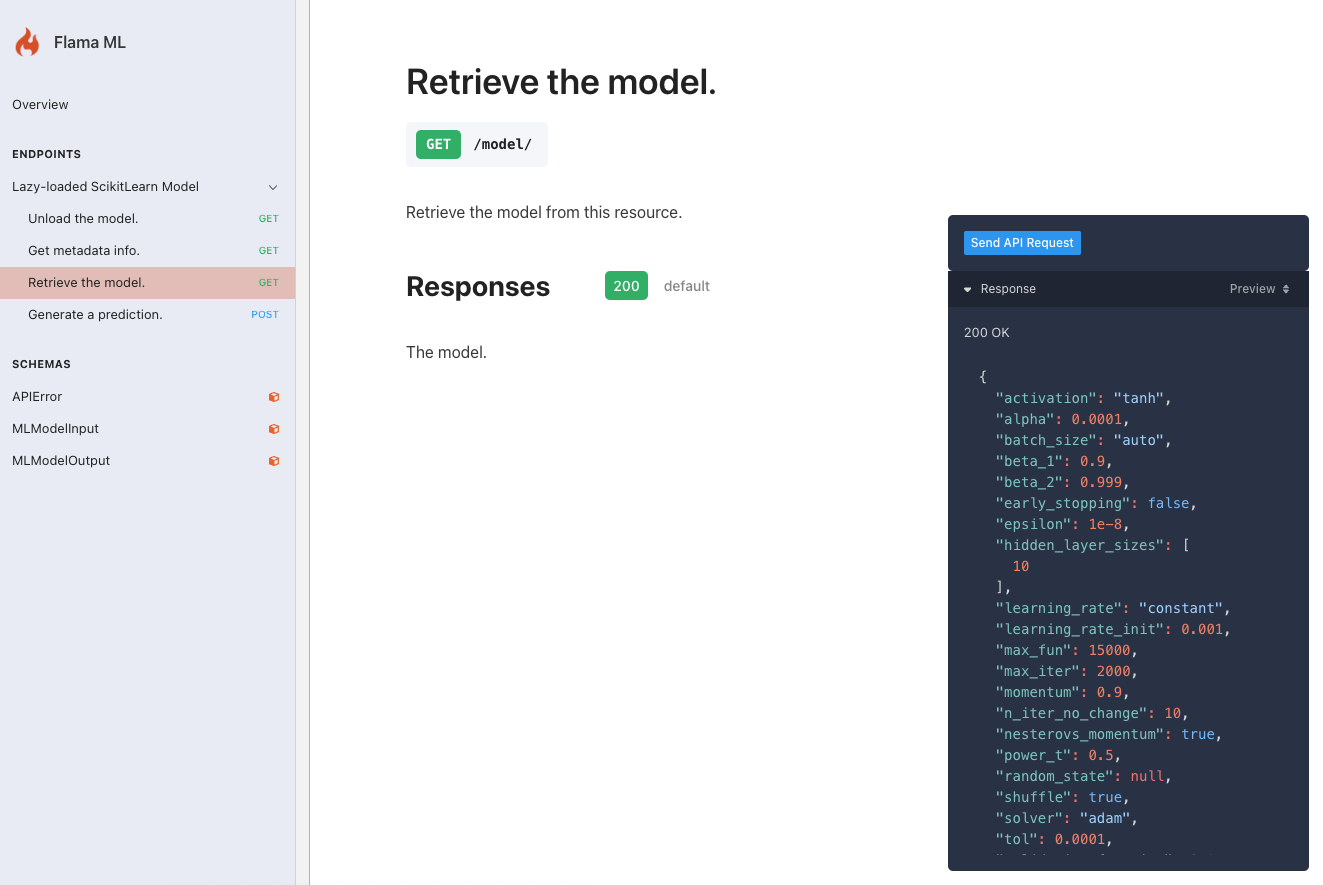

As can be seen, the loaded attribute is false, which means that the model is not loaded. Now, we can navigate to the section Retrieve the model (which calls the /inspect/ endpoint), and click on Send API Request. This will return the following response:

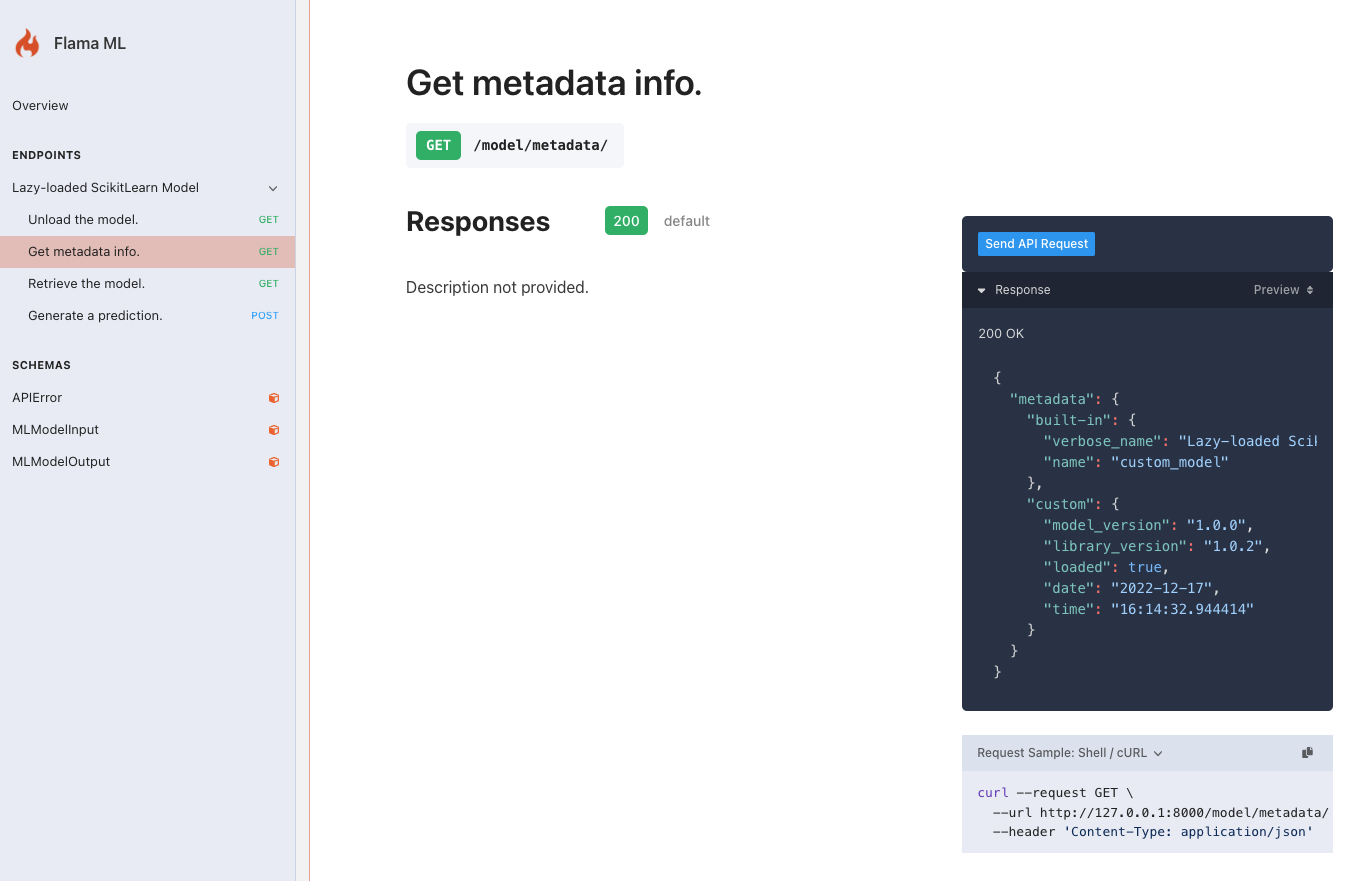

If we navigate again to the section Get metadata info, and click on Send API Request, we will see that the loaded attribute is now true. This means that the model has been loaded.

Finally, we can navigate to the section Unload the model, and click on Send API Request. The response contains the same metadata with the loaded attribute set to false again, which informs us immediately that the model has been unloaded.

🥳 Hurrah! Everything is working as expected. We have successfully added a custom model component to our application.
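The same check can also be scripted instead of clicking through the docs page. The sketch below uses only the Python standard library and assumes that the application is running on localhost at port 8080 and that the resource routes are served under /model (for example /model/metadata/); adjust BASE_URL if your setup differs. The helper names endpoint, is_loaded, get_json and check_lazy_loading are introduced here for illustration.

```python
import json
import typing
import urllib.request

# Assumption: host, port and mount path of the running application.
BASE_URL = "http://localhost:8080/model"


def endpoint(name: str) -> str:
    """Build the URL of a resource route such as 'metadata' or 'inspect'."""
    return f"{BASE_URL}/{name.strip('/')}/"


def is_loaded(payload: typing.Dict[str, typing.Any]) -> bool:
    """Extract the 'loaded' flag from a metadata response."""
    return bool(payload["metadata"]["custom"]["loaded"])


def get_json(name: str) -> typing.Any:
    """Send a GET request to a route and decode the JSON body."""
    with urllib.request.urlopen(endpoint(name)) as response:
        return json.loads(response.read().decode("utf-8"))


def check_lazy_loading() -> None:
    """Repeat the manual steps; requires a freshly started application."""
    assert not is_loaded(get_json("metadata"))  # model not loaded at startup
    get_json("inspect")                         # first model request loads it
    assert is_loaded(get_json("metadata"))
    assert not is_loaded(get_json("unload"))    # unload returns the metadata
```

With the application started, calling check_lazy_loading() raises an AssertionError if any of the steps does not behave as described above.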

Examples

So far, we have seen all the necessary steps to add a custom model component to our app, in particular a ScikitLearn model component. However, we have not shown how to implement a PyTorch or TensorFlow component. Actually, the steps are exactly the same for all of them.

In the following, we show the template code for a custom model component for each of the libraries mentioned above. As is customary by now, we will be using the example FLM files generated in the previous section, namely pytorch_model.flm, sklearn_model.flm and tensorflow_model.flm.

In this case, the change required to use one or another library is minimal. In particular, we only need to change the FLM file passed to the component assigned to the class attribute component (and, optionally, the verbose_name).

PyTorch

    component = MyCustomModelComponent("pytorch_model.flm")


    class MyModelResource(BaseModelResource[MyCustomModelComponent]):
        name = "custom_model"
        verbose_name = "Lazy-loaded PyTorch Model"
        component = component

        # ...

Scikit-learn

    component = MyCustomModelComponent("sklearn_model.flm")


    class MyModelResource(BaseModelResource[MyCustomModelComponent]):
        name = "custom_model"
        verbose_name = "Lazy-loaded ScikitLearn Model"
        component = component

        # ...

TensorFlow

    component = MyCustomModelComponent("tensorflow_model.flm")


    class MyModelResource(BaseModelResource[MyCustomModelComponent]):
        name = "custom_model"
        verbose_name = "Lazy-loaded TensorFlow Model"
        component = component

        # ...

Conclusion

We have seen how to add a custom model component to our application. In particular, we have seen how to implement a model component which loads the model lazily. This is a very useful feature, as it allows us to save memory and resources when the application is not being used. This has allowed us to create a completely customised machine learning API using Flama. With this, we hope you have reached a good understanding of how to use Flama to create your own machine learning API, and how to add custom components to it, which will very likely be necessary in your projects. Feel free to use the code provided in this tutorial as a starting point for your own projects, and to modify it as you see fit. We hope you become a Flama ambassador, and that you share your experience with us. We will be very happy to hear about your experience with Flama.